|

|

|

Recorded and edited by second interview April 1, 1999 |

|

SS We ended last time when you had gone to Germany and you were in Ken Holmes' lab and you were telling me about Gerd Rosenbaum

SCH Let me just for about 2 minutes summarize a couple of things in case they got lost in the earlier text. Up until now in thinking about spherical virus structure and concepts that might be useful either for structural biologists generally or for virologists, there were in my own work two things that had happened:

First from small angle scattering we had tried to make some conclusions about the distribution of RNA and protein and in retrospect we kept getting them sort of alternately right and wrong. One important one was that from the small angle X-ray scattering spherically averaged electron density, the density inside of about 90 angstroms was a certain level, between 90 and 110 angstroms it was a much higher level, and then outside was again the lower level and then beyond about 160 angstroms radius, it fell off. We knew from the EM that was the outside of the virus. So that was interpreted as saying the RNA was concentrated between two protein shells and at one point in my thesis I actually described a possible model in which there was a core made up of a T=1 assembly of the protein subunit and an outer shell made up of a T=3 assembly. That was hinted at - it was wrong but one reason it seemed plausible was one knew that you could get a T=1 assembly of the proteins. Indeed we knew at that time from polyheads and other things that viral protein subunits made other kinds of assemblies than the ones they were supposed to when they were under some special conditions and so we thought maybe the T=1 particle - and Aaron Klug actually thought that from some electron microscopy - that the T=1 particle was actually a core. Now, much later we realized, but only after the structure, that the T=1 particle was only made when you cleaved the arm off the subunit and the assembly was unregulated. But at any rate because of what was known from EM already in 1965 there were stages in which one had the wrong interpretation and one thing I always tried to do, and I don't know whether I succeeded, was in the introduction to a paper that came later try explicitly to say what was wrong, or what we tried to disprove, or what we thought the new results disproved from an earlier interpretation.

Incidentally, there is a funny way in which there probably is a higher concentration of protein inside a region of high RNA concentration, now that we know there is a long N-terminal arm of the protein that reaches inward. In other words, there probably is more protein below 90 angstroms than between 90 and 110 and that does explain the density, but it is protein reaching in. When we were originally thinking about it, the notion, and that's one thing we'll get to later in terms of general notions in structural biology, the notion that a protein might have a long extended arm that was disordered and would wrap in different ways was total heresy. By the time we realized that that was true - in a funny way it had less impact, except on me, for reasons that I couldn't understand but it has taken a long time for people to get used to the idea that proteins might have long floppy extended regions. Even now with structural genomics, I think people are ignoring that, but often the most interesting part of a protein is something that may fold in different ways in contingent situations.

So here we are now in 1971, and the first thing then was the radial distribution of density and we certainly got the density right. But as I say we had various interpretations of why it had that pattern at various times and when one looks into the papers one will see that for awhile I thought it was highly hydrated RNA in the middle and less hydrated RNA between 90 and 110 angstroms and so on.

One thing that made it clear that there was no distinct protein core was - and this is an interesting historical aside - the determination of the proper molecular weight of the subunit. SDS gels came in probably about 1968 or '69. I don't remember exactly, but during the period that I was in Don Caspar's lab and, as you probably remember, the popularization of the technique was the realization that you could measure polypeptide chain molecular weights well by this method. That was due to Klaus Weber but the method had been introduced by Jake Maizel earlier in studies of polio virus and Klaus and J. Rosenbusch, who was then his student or postdoc, came to me and said "we better do bushy stunt because you guys actually don't know the molecular weight well enough to know whether there are 180 or 240 copies in the virus particle". I had measured the virus particle molecular weight quite carefully by sedimentation diffusion, so we knew the molecular weight of the virus particle, and we knew the molecular weight of the RNA from physical chemical measurements - certainly not sequencing which came in only much later. So we had to know the molecular weight of the protein which was not well determined by physical chemical measurements for, in retrospect, dozens of reasons, not just some of the uncertainties we thought were true at the time. So SDS gels were the way to clear it all up, and sure enough when we found out what the size was, about 40,000, then it became clear there could only be 180 copies in the particle, so there could not be a distinct core. And at this point one had to go and reinterpret our thinking about what the inside is. I think where I put that was in the Cold Spring Harbor paper, where I said that maybe it is more highly hydrated RNA inside than between 90 and 110 angstroms, but of course didn't think of the notion that some part of the 180 subunit could actually reach inside. That seemed bizarre.

SS It seems so obvious now.

SCH It seems so obvious now. Roger Kornberg and I had a long discussion of that with regard to histones, because histones have arms that reach around DNA in nucleosomes, and that was actually proposed by people. Roger thought it was crazy because there was no example of it. This happened somewhat later and so he wrote into a review article that this flew in the face of everything we knew about protein structure, and he did that in early '77. It was in an Annual Review article, as I recall. He was then living in Boston, and we saw a lot of each other. And then, when actually later in '77 we realized that that was exactly the case from seeing the arms in the high resolution map, I remember my very first reaction was "Oh my god, Roger will hate me" because there had been something of a debate, not acrimonious exactly, but a sort of somewhat intense debate between him and other people in the histone field about whether histones would have arms or not. And what he had written into his review was pretty categorical saying something like - it goes against everything we know about protein structure, or it seemed preposterous. I don't know if he used the word preposterous, but he certainly did in private conversation.

The following is what Roger Kornberg wrote:

"Histones H2A, H2B, H3 and H4 are often described as having extended NH2-terminal regions that protrude from the central part of the nucleosome and reach, like "arms", "tails" or "fingers", around the DNA. This view of histone conformation can be questioned; it represents a departure from established principles of protein structure that is not required by the evidence." And then he continued: "Even if the existence of extended NH2-termini could be demonstrated for histones in solution, it would not necessarily follow that the NH2-termini remain extended for histones bound to DNA in chromatin. In many instances where a degree of conformational mobility has been found for enzymes, it is suppressed by the binding of substrate." (Ann Rev. Biochem 46:931-954,1977)

SCH Then, to continue summarizing:

The second point is that by 1971 one had an approximate solution to the phase problem, from being pretty sure one had found the sites of a platinum derivative. This was based on what I can later elaborate upon but technically would be described as follows: phases were derived (1) from the study of the small angle x-ray pattern (the spherically symmetric information) plus (2) from inspection of icosahedral harmonics, having worked out what the major feature of the particle at the lowest resolution was - namely what we now know are the projecting domains, but at any rate 90 lumps at the true and quasi dyads of the T=3 lattice. Knowing that it was clear how to phase a major component that was due to the eighteenth and twentieth order icosahedral harmonic (literally I did that sort of by hand) allowed me to use that information to get a difference Fourier, which I thought was telling me where the platinum derivative was, and sure enough later that proved to be right. And through the interactive computing that I mentioned in the last interview in Ken Holmes' lab, I was able to play around with that solution so to speak, and by the end of the year was convinced that it was right from sensible sorts of crystallographic criteria.

SS Before we go on, I want to ask you - how dependent were you on other X-ray work? What fields of science were you building on at this stage when you talked about doing the structure? Most biologists weren't thinking about this kind of structure.

SCH The community I was trying to learn from and interact with was largely what was then called protein crystallography, what we might now call structural biology. The literature I was reading for the technical side of things and that was almost all of my problem - I knew I still wasn't saying anything of interest or very little to biologists - was very much the literature of methods in protein crystallography.

SS So people in protein crystallography were already thinking - well I guess Don Caspar was thinking about large scale structures.

SCH Yes, viruses had been crystallized in the 30's by Bawden and Pirie and the very first X-ray diffraction pattern of a virus crystal was taken by Bernal and Fankuchen in the late '30's and published in '41, but it was immediately evident that one could do almost nothing with it since indeed at the time one could do almost nothing with the hemoglobin crystals.

SS I was wondering whether it almost would be geometry - mathematical geometry - that would be helping the crystallographers to think about these structures in terms of icosahedrons.

SCH Well, Don Caspar and Aaron Klug had certainly built geometrical models from which they deduced the T-number series - that is the possible subtriangulations of an icosahedral lattice - the so-called icosahedral harmonics that I described are in a way very closely related to the subtriangulation series. There are different ways in group theory of formulating the problem of how you arrive at icosahedrally symmetric patterns on the surface of a sphere. And one could be thought to be a sort of geometric analysis of some of the restrictions, and the icosahedral harmonic analysis is literally a differential-equations type analysis or a continuous-function analysis of what sorts of so-called orthagonal functions or basis functions you can decompose an icosahedrally symmetric structure into. The notions are closely related.

SS Now we're back in Germany.

SCH So we're back in Germany. I mentioned Gerd Rosenbaum because he had spearheaded the synchrotron effort. He was a physicist or an engineering physicist, a graduate Ph.D. student of Ken Holmes, who was particularly talented at automation and servomotors and such, which were necessary at synchrotron installations because, of course, you couldn't work when the beam was on. That was something pretty new to us, that you couldn't line up your crystal in the X-ray beam, and you had to figure out ways to do all this by remote control, and the remote control aspect at the time was very challenging. We take it for granted, because computer control is so easy now, but at the time it was actually quite a mess, and a lot of serious machine shop work went into this, and whole trips to the synchrotron were just to test a given motor and its control business.

This was 1971, an awkward time for an American to be in Europe, actually - a young American - because you were constantly being challenged about the Vietnam war. Indeed I went to Finland for a meeting that was organized by people I had never heard of. They turned out to be Kai Simons and Ari Helenius, then Kai's graduate student, and others from the Institute of Leevi Kaariainen. I got fogged in in Frankfort all day and so arrived late and very, very tired and was taken two hours from Helsinki to where this meeting was. It was in a sort of remote research station and was immediately told I had to go to the sauna, a small room filled with naked men swatting each other with birch swatches. And in the midst of all this - I was headachy and exhausted and no food, and shoved into a small closet with all these people busy flagellating each other - somebody turned to me and said: "and what have you done about the Vietnam war?". That turned out to be Kai (Simons). Fortunately I had done something about the Vietnam war and I described in great detail my participation in the anti war movement which, I think, shut him up.

SS So let's go back to tomato bushy stunt. We're now in 1972.

SCH Right. So I came back to Harvard and the first goal, which I now knew was feasible, was to get good 3-dimensional data to some intermediate resolution, initially 5.5 angstroms (I'll tell you why that particular initial goal) and to extend the phase information that I was pretty sure we did indeed have from the determination of the positions of the platinum derivative. So I set up the lab. It was clear we had now solved the problem of how to get decent data.

SS Who were we at this stage?

SCH I, as a graduate student and post doc, and then I say "we", I guess, because the community sort of took up on the work to some extent, although probably it is still fair to say "I". Then "we" quickly became a small group that joined my laboratory, in the standard fashion. A postdoc named Tony Jack, whom I'll get to in a minute - he had worked on non crystallographic symmetry mostly computationally as a graduate student with David Blow. He was in a position next in line after Tony Crowther. There was Crowther, Jack and Bricogne who developed with David Blow the theory and computation of non crystallographic symmetry. And you have already talked to Michael Rossmann who was independently - having moved to Purdue - developing his own computational applications of the original perception by Rossmann and Blow that non crystallographic symmetry would help you solve the phase problem.

Tony (Jack) joined, and then a graduate student in applied math came by to talk to me named Clarence Schutt who didn't use his first name and announced to me that he was called "Schutt" or he preferred "the Schutt". And he became "Schutt". He's quite a character. He's now at Princeton. He developed quite a reputation as a humorous character. He was a terrific cheer leader for the lab in any case and helped make the place a good place to work, since I'm rather intense and didn't realize at the time how important it was to work on the morale side of things in organizing a little research group. By the end of the first year, a woman named Micheline McCarthy came and worked on Sindbis virus. And also a sort of senior postdoc - a little bit more like a sabbatical visitor though he came for a couple of years from Finland - arrived called Carl Henrik von Bonsdorff, who also worked on Sindbis virus.

Carl Henrik ultimately was the first person to show that there was a T=4 icosahedral lattice of the glycoprotein (of Sindbis virus), and the micrographs were really easy to read by both freeze-fracturing and negative stain. That got then rediscovered by the EMBL group with much better micrographs much later, claiming that ours hadn't actually proved the point which indeed they had. They were quite clear! So his accomplishment, I think, was to show that there were defined lateral interactions between glycoproteins in the membrane. We knew by then from the work of Simon and Helenius and their collaborators that the glycoproteins would have a membrane anchor - a transmembrane segment. The glycoproteins could form regular lateral interactions and so there was an issue of congruence of those interactions with the internal interactions and how that would contribute to the stability of budding. At any rate Carl Henrik also brought cell culture technology with him. He actually brought a technician with him for about 6 months named Rittva Saarlemmo, who wanted to learn English and her lab had arranged to send her out with him for 6 months. She arrived and turned out to be extremely attractive and suddenly there was a flurry of attention on Rittva around the Gibbs laboratory, but that's another story.

Back to the question of who was "we": in terms of collecting data accurately and so on, within a year I guess Schutt and me, but in 1972 it was still me, probably. At any rate, the problem of data collection was solved and the problem of adequate X-ray intensity was solved from the new generation of rotating anode generators. The development of the engineering technology in those generators was due in no small part to the work of Ken Holmes when he was still at the MRC. He had interacted closely with the engineers at whatever the company was called then that was developing what is now made by Nonius. (I guess it was already Elliott rotating anode generators). That coupled with the mirror optics was clearly what would be adequate to get good data. I set up such a generator, which I had gotten from my first NIH grant, and set up appropriate optics on it. By now instead of precession, one was starting to use a method of data collection called rotation or oscillation. This is actually an old method, but one which gives a pattern that without an automatic computer is rather difficult to interpret but is much richer and more efficient for data collection, optimally efficient and which came back once automatic film scanning was available. I told you that Don (Wiley) and I developed programs for the automatic film scanners. The film scanner we used was Lipscomb's film scanner upstairs, which by now was - the law suit had been settled - the same scanner we had previously programmed for. The only problem was, we were only allowed to use it on Thursdays and Saturdays and they tended to call the service on Thursdays, which it needed not all that infrequently - so it was a source of some tension that the instrument key to us was accessible to us only on a rather awkward schedule. Fortunately if you looked at an X-ray film you could tell whether it contained good data or not, so that as long as you got adequate time every week you could evaluate all the data. Meanwhile what was becoming increasingly clear, and one reason that Tony Jack had chosen to come to my lab, was that the application of the non-crystallographic symmetry theory, and its computational implementation, was going to be critical to solve these sorts of structures. It was actually one of the reasons why Michael Rossmann got into working on viruses in the first place. I think even in their very first paper they might have said that this is probably how you would solve virus structures. The notion that virus structures were something important - that they were sort of the Mount Everest of crystallography - had been around since Bernal and Fankuchen, basically. Then, with the realization from Crick and Watson and Caspar and Klug about the icosahedral symmetry - the notion that non-crystallographic symmetry theory and computing were going to be the way to actually get an answer was perfectly evident, and it became conventional wisdom. So the very first thing Tony Jack and I did was formally to take the 3D reconstruction from micrographs and use that in an actual computationally rigorous way to phase the crystallographic data and actually get much better phases, which we could then improve by the non-crystallographic symmetry method that he had developed as a student and programmed. So by '73 or so we had actually taken rigorous phases from the micrographs or, if you wish, more rigorous phases, computed rather than just derived by my looking at images and saying therefore these icosahedral harmonics have to have such and such a phase. Thus, we did the phasing by methods one uses now, effectively what would be called "molecular replacement". We used those phases to locate the platinum derivatives and to confirm what I had worked out in Heidelberg. We then also used the phases to find a mercury derivative, used the mercury and platinum derivatives together to have double isomorphic molecular replacement phases, improve those phases by non crystallographic symmetry computation and calculate, by 1974 or so, a 16 angstrom map that we published in JMB together with another paper, in which we described how we used the electron microscopy to get phases in the first place.

SS At that level could you see much more? Could you get any more clues to the virus structure?

SCH In retrospect very little. We tried to interpret it in terms of the distribution of RNA and protein and some issues of quasi-equivalence, but we did actually learn one important thing that we put in there. And that was what the small particles must be, because we could see that the packing of the proteins was such that the packing around the 5-fold axis was just what you would expect for a simple T=1 small icosahedral particle and that therefore what had to be going on was that the packing across a quasi-2 fold and the packing across a true 2-fold of subunits had to be distinctly different. If you wish there was higher curvature in the one than in the other, and what that means ultimately is that there is a distinct switching between two states of the dimer of the protein subunits. I don't think we thought of it quite that way or could have said it that way. But the notion that you have distinct switching between two packing states of the protein subunits: one represented by what we now call the A and B subunit conformations to either side of a local 2-fold and what we now call the C conformation to either side of the true dyad. Those were somehow distinct; the fact that there was a binary switch, so to speak, was clear. I don't think we said it very well at the time - because we wouldn't have known how to think about it - but that conclusion was clear in our minds and so when we actually solved the 5.5 angstrom structure a couple of years later or the high resolution structure, the issue of that switching was the way we looked at the electron density map to begin with, or looked at the structure to begin with. So there was in the sense of the structural biology rather than the virology of the problem, an important conclusion that you could see at 16 angstroms. Actually Don Caspar had anticipated this from detailed looking at the electron micrographs much earlier, and a styrofoam ball model that's in our Cold Spring Harbor Symposium paper actually embodied the whole notion.

SS So now there is a 16 angstroms structure.

SCH So we're at 16 angstroms. Why did we actually set 5.5 angstrom as our first really important goal? The myoglobin work had stopped at 5.5 angstroms initially (that was the "visceral" picture), and the reason is that 5.5 angstroms represents a kind of natural intermediate point for a protein structure. Issues of secondary structure and its packing can be seen at 5 to 6 angstroms - and then information beyond there starts to involve individual residues and side chain packings. You can see that. Kendrew knew that before he had seen anything from the fall-off of mean intensity with resolution. There is a major peak, if you actually plot mean intensity as a function of resolution, around 10 angstroms, which has to do with the mean diameter and packing of alpha helices; then there is a minimum around 5.5 to 6 angstroms and another peak around 4.5 angstroms, which is side chain packing, basically carbon methyl-methyl- methylene-methylene packing. So, it was clear to crystallographers, even before we knew any protein structure, that there were distinct regimes of information and that's why historically 5.5 angstroms had been set as a stopping point. That's pretty well gone away, and one goes straight for high resolution structures these days. But at the time - where so much work and time was involved - it was fully appropriate to stop at 5.5 and indeed to publish, even in major journals, the 5.5 angstrom structure and to describe what things you could conclude, before going on. Of course for most laboratories, each stage was the work of a different post doc or graduate student and that became true in my lab also. Some of the people, like Schutt who worked on the project initially, were part of the 5.5 angstrom work because of the methodological advances he made. There is also the tradition of trying to include people who have worked on earlier stages in later papers even if they have left the laboratory since so much work went into these things. I've tended to respect that tradition, and I have tried even in an era when things happen much faster, to make sure that if I feel that what somebody contributed, even in an earlier stage, to the way one did things or what one did at a later stage, was important, to include them as authors on a later publication.

SS At the 5.5 angstrom structure - what did you see now?

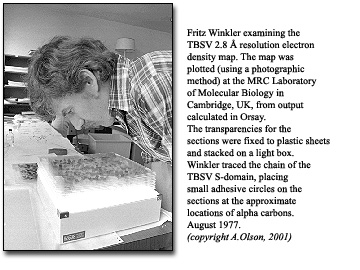

SCH At 5.5 angstroms we saw a lot. In order to move on to 5.5 angstroms we now had properly to use the so-called oscillation or rotation method. The 16 angstrom structure was actually entirely based on the old fashioned precession way of collecting data. In order to go to 5.5 angstroms, we had to really develop the oscillation method. That was being developed by others in England, especially by Uli Arndt and Alan Wonnacott. We had to do all our own programming in collaboration with people in Lipscomb's lab who had actually set up the outlines of the program - as I remember called Scan 12. I think Scan 11 was the precession version and Scan 12 was the oscillation version, both of which were descendants of the program outline that Don Wiley had set up, and that I had worked on with him when we were students. He had certainly pioneered them; I was more a second collaborator than an initiator of the algorithmic outline of these programs. I think he had worked out the outline in close collaboration with the people at the company building the machine. A key issue had to do with how you treat what are known as partial reflections. In the oscillation method you rock the crystal back and forth in the beam over an angle of a half a degree or a degree, recording X-ray diffraction as you go, and when you turn around, some of the spots would not be completely recorded, because you would have to rock a little bit further in order to get all the intensity from that diffraction. Some work that Schutt and Fritz Winkler did - Fritz Winkler had by now come from Switzerland to join the lab - used diffraction theory in significant and imaginative detail to work out how to treat the partial reflections properly and in particular how to treat the partial reflections so you could correct them. What other people were doing was then recording another photograph from the very same crystal for the next oscillation range, so that you could add the end partials from one and the beginning partials from the next. We couldn't do that with the virus crystals because they died too quickly - we could get only one picture - so if you were going to use the partial reflections you were going to have to correct them for their degree of partialness. Moreover, we realized, especially at high resolution, almost all of our reflections would be partial, because the crystals were so sensitive that we couldn't rock through a large enough angle not to have most of the reflections on the edge.

SS At this stage was the work being done at Harvard?

SCH All the work being done at Harvard - rather than where?

SS At a synchrotron

SCH The entire structure was done on conventional sources. Synchrotrons were slowly being developed, but they were not routinely useful yet and didn't become so until late in the 70's or early 80's, so that all the early work was done entirely on conventional sources: southern bean mosaic virus and polio were done entirely on a conventional source. For rhinovirus, even the first structure was on the synchrotron. Indeed, CHESS got a lot of publicity on that first virus structure.

The method for treating partial reflections was absolutely critical for getting on with the issue of how you were going to get decent data from crystals that had large unit cells and that were labile - for which you could only get a small amount of data from one crystal. Schutt and Fritz Winkler really worked that out themselves. It was an important contribution in Schutt's Ph.D. thesis. The method is now called postrefinement, actually Schutt called it that. It was a refinement calculation that came after evaluation of the intensities in the film, whereas what we had called orientation refinement up to then had to do with some calculations that were done, because of the way the programs worked, before actually integrating intensities, and that funny name (postrefinement) has stuck. It's still called postrefinement even though I think nobody knows what it means anymore, because you do the computations rather differently now.

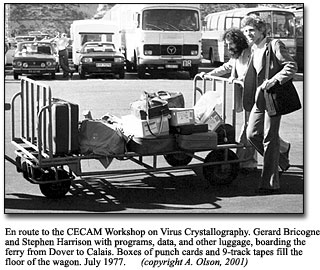

There continued, therefore, to be important work in diffraction theory, and computation and writing of programs on the issue of how to get adequate data, and as I said there now remained the issue of how to turn those adequate data into adequate phases. And just at this moment, the right thing happened. I learned that David Blow's next-generation graduate student, Gerard Bricogne, who had overlapped a little bit with Tony Jack, had really made a major breakthrough in the business. He was a French mathematician, who came as a graduate student to Cambridge. He knew enough mathematics and computation theory to realize that the way we and everybody else were trying to do the noncrystallographic symmetry calculations was essentially computationally unfeasible at any sensible resolution but that a mathematically fully equivalent computation, done in direct space rather than reciprocal space, was computationally totally feasible, because of algorithms like the Fast Fourier Transform, which scaled more like the number of data that went into them (the number of reflections) rather than like the square of the number of reflections. So the calculation got bigger in a manageable way instead of blowing up into a computation that was way beyond what any computer available at the time or in the foreseeable future could do. The calculation was clearly doable. Moreover by 1975, Gerard had written a beautiful suite of programs, in retrospect one of the most beautiful suites of programs ever written in crystallography, I think, to carry out these computations. They were models and still are in my view - models of programming clarity. You didn't really need to read his papers, which were so mathematically abstruse that indeed almost no one had read them. I did read them, and the limited amount I understood was sufficient for me to realize what to do. But the programs themselves were so beautifully written that just reading the comments in the programs was sufficient to be able to understand what was going on. And there was a very clever algorithmic development called a "double sort", which was necessary in order to fit the computation into the computers at that time. One does the computations differently now because the issues are different. The limitations of input/output versus available memory are just totally different in modern computers. At that time, though, they overcame some very important limiting issues. Gerard actually wrote to me and suggested that our problem, which he had heard about presumably through Tony Jack's grapevine, was getting to a stage where perhaps a collaboration was in order, since he had written these programs. I leapt at that and invited him to come spend January '76 in my lab and get the programs installed. So he did indeed arrive sometime early in the New Year (in January). It was an unbelievably cold January, one of those January's when it never goes above freezing for most of the month.

SS If this is somebody from England, the fact that there was central heating might have helped.

SCH All I remember is I burned out a starter motor in my car trying to get it started.

The original notion was just to get the programs installed, and then we would slowly figure out how to use them. But within a few days, he and I both realized that we could probably get the whole computation done and get a 5.5 angstrom map calculated in 2 to 3 weeks of very hard work. I knew I had to start teaching on the first of February, a physical chemistry course that Bill Eaton was coming up to join me to teach, so we had about 3 weeks (as I remember) to get all this done, and we managed to do so by more or less working around the clock. The computations were actually being carried out on a computer in New York, at Columbia University, but fortunately there were not "computer girls" flying back and forth anymore. There was a telephone hookup to a teletype, of course, not a terminal, a horribly noisy teletype.

SS Must have taken a long time too.

SCH But the turnaround wasn't so terrible. The Columbia University Computer Center was an important regional center, and the availability of disc space was substantial. From working closely with Gerard, I finally learned a lot about issues of allocation of disc space and memory and other aspects of doing computing, at the edge of what you could possibly do. By the first of February, we had a map. Turning that map into something you could look at was no joke, and that took a good 6 weeks of contouring by hand. We printed the map out on a line printer using a standard method in which you chose a set of symbols that were denser and denser in their blackness to the eye, and then we contoured between the various levels defined by those symbols onto clear plastic and stacked the whole thing up. We got huge cardboard frames from an architectural supply firm and spread thin mylar across them and contoured used a Rapidograph pen (or a relatively fine pen) onto the mylar. We had sections of an angstrom each, if I remember.

SS How did you do this while you were teaching?

SCH Well, we actually hired an undergraduate who helped with it. It was sort of a communal effort. Everybody would do a little contouring, and whenever I had a few moments I would come in and contour. We could only get about a section done in a day. But the map was stacked up by sometime in March, because I did get to go - we'll come to this - to a crystallography meeting in Erice to talk about the structure. While we were working all these long nights, Bricogne and I, we became friends and also started to talk about how the hell we would do this at high resolution. It was immediately in our minds that we better go on right away to high resolution - meaning in my mind about 2.8 or 2.9 angstroms - partly that had to do with the available crystal-to-film distances and limitations imposed by the size of film that was available - the edge of the film came out to 2.9 angstroms. I knew how you would get the data: there would be 1/2 degree oscillations. We had solved the problem of how to deal with partial spots, so reprogramming of the data processing programs was needed, but Fritz Winkler got that done before he left to go back to Switzerland in June or July. So we knew already when we were finishing the 5.5 angstrom calculation how we would go on. The only problem was getting a computer big enough to actually do it. Gerard didn't have any problem thinking about how to expand the program. It was really a matter of where a computer with enough available disc space and so on could be had. He thought that the Cambridge University (England) Computer Center computer wouldn't work, and you used to pay with real grant dollars for cpu time then, and so we also had to figure out where would be affordable. We didn't even look into whether Columbia (NY) would be good enough. I think it wasn't, although we didn't know how it would expand in a year. I also couldn't possibly afford it. But Gerard knew of an organization in Paris called CECAM (Center European Atomique Moleculaire) where he had been for a summer workshop a year or two before.This center, directed by an American expatriate named Carl Moser, was in the business basically of organizing summer computing workshops at a huge interregional computer center at Orsay and giving away a lot of computer time.

The

French go away in July and August, so particularly after July 14, this enormous

computing center was completely unused. So CECAM's purpose was to give away,

to American and non-French Europeans who were willing to work in July and August,

gobs of wonderful computing time. That wasn't its stated purpose, but that in

practice was what it was about. That organization played a big role in development

of molecular dynamics, the things that Martin Karplus and others became well

known for in computational chemistry. I know that Gerard and I figured out,

already before he left in February, that we would have to make a proposal to

CECAM for a workshop in the summer of '77 to do these calculations, if I could

get all the data by then.

The

French go away in July and August, so particularly after July 14, this enormous

computing center was completely unused. So CECAM's purpose was to give away,

to American and non-French Europeans who were willing to work in July and August,

gobs of wonderful computing time. That wasn't its stated purpose, but that in

practice was what it was about. That organization played a big role in development

of molecular dynamics, the things that Martin Karplus and others became well

known for in computational chemistry. I know that Gerard and I figured out,

already before he left in February, that we would have to make a proposal to

CECAM for a workshop in the summer of '77 to do these calculations, if I could

get all the data by then.

SS So this would be over a year away?

SCH yes - that would be a year and a half away from when we were having the discussion. But a year and a half was a very short time for solving structures in those days, so that put an incredible deadline for me. I was going to have to really work hard to be able to get all the data. Anyway, probably the next thing to talk about is the 5.5 angstrom map and what we concluded about virus structure from it.

From a structure at this resolution you obviously couldn’t build a model. Indeed, how you presented the results was something of a problem, because you had maps on sections, and you could photograph those peering through a number of sections. We did that in the paper or in the slides that I presented, but that wasn’t a very satisfactory way of trying to give a 3-dimensional impression, since there were no 3-dimensional computer graphics. How do you go from looking at these maps to saying anything? The traditional way with small proteins was balsa wood models - "intestinal" models of myoglobin kind - and they were very useful and informative for showing in the early stages some gross aspects of protein architecture. The instant proteins got more complicated than myoglobin, that is they were not all helical, then actually the balsa wood models weren’t very useful. Beta sheets or complex packing of helices on sheets turned out not to be very easy to see or even to recognize from balsa wood models. So balsa wood models quickly became symbols more than anything else. They were representations of the fact that you had reached a certain stage, and the figures told you that your model had to be right to a certain accuracy, but it wasn’t very easy to conclude very much from them. Anyhow to build a balsa wood model of this enormous structure was clearly out of the question. Ultimately, I just made sketches, which went into the paper, about what we thought.

The most important conclusion we could come to was that the subunits folded into two clear domains: we couldn’t know yet, and didn't know until 2 years later, that those domains comprised only about 2/3's of the total polypeptide chain, because issues of exactly where the boundaries were and what the volume would be were not so clear. We were unable to come to any conclusions about arms penetrating inwards. Indeed, we got things wrong in a certain sense as I will tell you in a minute. We could clearly see that the protein folded into two domains. The projecting domain was especially clear, and we could see that it was a beta sandwich of some kind from the curvature of its walls, so we knew that the dimer clustering we had seen in the electron micrographs and in the low resolution X-ray maps was due to these projecting domains. We estimated, probably reasonably, about how many residues went into those, but we certainly didn't know. I think we had no idea which end was the C-terminus and which the N-terminus.

SS Was the sequence of the protein known then?

SCH The sequence was not known and what we could see was that there were 2 conformations of the protein involving a hinge rotation between the two domains. Now the notion of domains and hinges had entered into structural biology - at least in my recollection - in a firm way through the immunoglobulins. The early immunoglobulin structures showed clear segregation into domains and showed that the hinge regions were indeed hinges in some sense, but one hadn’t actually seen two clear states of the same hinge as I recall except in this (TBSV) example. Historically, I don’t think the structure had a huge impact in that regard but certainly in my own thinking it made a big difference to see a very clear hinge and to know that hinge motion was an important way in which a protein could be adaptable. Remember that the notion of quasi-equivalence had involved adaptability of the same protein to somewhat different packing environments or adopting of different conformations in different contexts. And part of our goal was to figure out how a protein in real life could achieve that flexibility. So for me, at any rate, the conclusion that domains with hinges between could yield radically different shapes while conserving bonding was clearly shown in the bushy stunt structure. Indeed, it was perfectly evident in the map that the projecting domains made exactly the same sorts of contacts - whether across a quasi dyad or true dyad - but that because of the hinge difference, as I said earlier, there was a high curvature of the effective packing across a quasi dyad and relatively little curvature across a true dyad.

The other totally striking thing that was immediately evident was that there was a stretch of density that we now know to be the folded-up arm of the C subunits that was present only between the C subunits and not between the A and B subunits or what we now call C and A and B. And obviously we thought that might be RNA, because the notion that you have a segment disordered on one conformation and ordered on another was again not very much in our minds. Moreover, there was another important misconception that I don’t think we got out of our minds for quite a while. I didn't get it out of my mind until much later and I think it isn’t yet out of many people’s minds. We believed that the way RNA would be specifically packaged was through a short groove for a recognition site on the inward facing surface of the subunit; we imagined that this groove would accept a bit of RNA in some defined way and that the RNA would then loop in a highly variable way across to another subunit. The RNA has a unique sequence. It will have secondary structure in totally non repeating patterns. One already knew from the secondary structure diagrams from Fiers' sequence of the RNA phage and other RNA sequences that RNA would have highly variable stem loops. A given viral genome would have all kinds of non regularly arranged stem-loop structures - the tRNA structure had been done by this time. But our thought was that there would be little segments of RNA that would fit in an exact way into some sort of groove on the inward facing surface of the protein and that at least some of those interactions would be sequence specific and they were would be, if you wish, origins of assembly. From the TMV origin of assembly work in the late 60’s and early 70’s, the concept of an origin of assembly was very much there, a packaging sequence or whatever. So the notion was that some bit of the RNA would be segmentally ordered and the rest would then run from one little bit of ordered RNA in some less regular way. So we were very much looking for small pieces of ordered RNA and quite convinced we would find them. So obviously a prime candidate was this one stretch of density that had no quasi-equivalent partner, and we actually proposed in the paper that it was RNA. It was actually very beautiful. It would have been perfect. It would serve as a switch, if you wish, for the two different conformations, so the RNA would be switching the assembly in just the way you would want and would dictate whether you had the flat packing or the curved packing and so on. So one of the total surprises, initially it was a grotesque disappointment, in the high resolution structure was that this feature wasn’t RNA. It was a folded arm, but we’ll come to that. In fact something like what we thought we were seeing is indeed happening in Flock House virus, that is, there is some ordered RNA associated with the arm. Flock House virus has basically the bushy stunt structure. We’ll get to the fact that most of the T=3 positive stranded RNA viruses have essentially the same structure, with a folded arm and so on, but each one does things a little differently. In the case of Flock House and black beetle viruses, the so-called nodaviruses, which Jack Johnson has studied in a very pretty way, there is both an ordered arm and some ordered RNA associated with it along the same line between two 3-fold axes across the dyad that has the ordered arms in bushy stunt. And there the RNA, he believes, does participate in switching. Probably there’s some RNA associated with those arms in bushy stunt but just not well enough ordered that we see it even as lumpy low resolution density. We, of course, did look at the time and we have looked again since then.

So the concept of hinging certainly came out of the 5.5 angstrom structure. I have to look back and reread the paper, which I haven’t done, to see what further things we got right and wrong. We got wrong the fact that the arm density might well be RNA. We declared that that was our best interpretation and that RNA would be involved in assembly switching, a conclusion that seemed attractive. And we also thought that some density on the undersurface of the subunits that we called the saddle - and it was true of the A and B and C subunits - might also be RNA, because it had a very funny looking curvature. In retrospect it’s just a very curved beta sheet. It’s a very characteristic highly curved beta sheet of the S domain, the undersurface of the S domain. In fact we took a tRNA model and fit a little bit of it into the density and showed it had about the right dimensions. That was a consequence of the fact that nobody had seen so accurately phased a 5.5 angstrom map before, because the icosahedral symmetry really gave you excellent averaging, excellent phases, and as a result a dramatic curvature of beta sheets, which was well known and which we recognized in the P domain, was not still enough in our minds that the possibility that the saddle was a curved beta sheet clearly occurred to us. Then there were other rod-like features of density, probably accentuated perceptibly because of the way we contoured it, the particular sectioning of the contours that we thought were alpha helices, but they’re not. It was just the way the beta strands crisscross. So altogether it’s fair to say that we knew there was a shell domain and we knew about the flat and curved packing and realized there was some switching going on, but other aspects of the molecular interpretation were pretty off base.

SS So you are still at a point where it’s interesting to X-ray crystallographers but not to biologists.

SCH And I would say interesting to protein chemists - that is not just X-ray crystallographers but also to people who were studying proteins from a more physical-chemical or mechanistic point of view. Remember ultracentrifugation and so on was still around as a way of trying to probe protein structure and function and so the people from that area, the physical biochemists, should have been interested. Now in practice they weren’t because physical biochemists initially resented protein crystallography intensely, because it was putting them out of business. They evolved elaborate ideologies for why the structure of protein crystals might not really be interesting.

SS It wasn’t in solution!

SCH And it took an enormous amount of work to make everybody realize that the structure of proteins in crystals was indeed so close, except for some small things having to do with crystal contact perturbation, to the structure in solution, that it taught you most or many of the things that you really needed to know, and that you had to begin with the crystal structure and then worry about some other phenomena happening in solution. So you’re quite right, it was largely to people interested in protein structure whether they were crystallographers or suitably enlightened physical biochemists who would be interested, or people who were trying to think about quasi-equivalence and what it meant. There were people who were interested in virus assembly, a small number who were very fascinated by it.

So I presented the structure at an Erice meeting in either late March or early April. It was Spring break week of '76. There’s a site in Sicily on top of a mountain called Erice. There is a Foundation set up by an Italian group - I’ve forgotten the details - which does mostly physics-oriented summer schools so to speak. Physicists, theoreticians especially, tend to like to gather for a couple of weeks in the year and especially at out of the way places and trade equations. This meeting was in the format of one of those physics "summer schools". There had developed a tradition of having protein crystallography meetings every so often, every 3 or 4 years, so this Erice gathering was a fairly important occasion. I persuaded my Department (at Harvard) to give me travel funds to go talk at it. I had not been invited initially because nobody knew we were doing the structure. And it indeed proved a very important meeting in that respect. One memorable thing that happened to me was a conversation with Herman Watson, who had worked with Kendrew on myoglobin, Kendrew’s senior postdoc, and then a staff scientist in Kendrew’s division at the MRC. He had probably moved by that time to Bristol and become a professor at Bristol. He worked on glycolytic enzymes. I knew Herman from the MRC lab, the Cambridge lab, and knew him to be one of the important "second generation" people in protein crystallography. We were walking from one place to another in Erice, and he said to me: "Well I never believed you would really be a protein crystallographer, but this result says you are" - in other words I had earned my credentials, which he severely doubted up til then. He confessed that he sort of discounted our work, that I wasn’t professional enough. By contrast, Michael Rossmann had solved a number of other structures before he got into virus crystallography.

SS Michael tells the same story about Erice where David Philips didn't understand why he wanted to do viruses because they were not alive - enzymes do things fast. It is nice to have a second view of the meeting.

SCH Probably the same meeting. Michael had just seriously gotten into virus work by then. Since I was a latecomer, the session had originally been organized in such a way that the speakers were Bror Strandberg (who I mentioned before was working on satellite tobacco necrosis virus in Sweden) and Michael (Rossmann) and a couple of other people. So I got squeezed into the session. This also made me realize that some of the professionals in the field thought that I was a kind of Johnny-come-lately upstart. I hadn’t thought that there was any reason to think about issues like competition, but the session got organized in a very odd way that actually did bother me at the time. Probably just the way it happened, because I was added very late to the meeting. Strandberg gave an introduction, which lasted about an hour, and then Michael gave a talk of about 45 minutes, his previously allotted time. Then I was supposed to squeeze my talk, which was the only one that actually had a structure, into 20 minutes. I said I needed half an hour, and they did give me that. Anyway I presented the structure, and it obviously did make a big impression on protein crystallographers, from what Herman told me. But the message at the end of Michael’s talk was they weren’t going to bother with 5.5 angstrom structure but rush on to 3 angstroms and that they would easily beat us out, which did make me terribly nervous. Gerard and I had already worked out the notion for the summer workshop in Paris, we had submitted a first application, I realized I was going to have to start collecting data like nobody’s business, and that we really would have to get it all done in a year and a half. Now in fact, it took the Purdue group quite a lot longer to finish their structure; southern bean was published in 1980 and we’re in '76 at the moment. But I think it’s probably true that I didn't sleep more than 5 hours a night until the end of the summer of '77. So anyway the work got presented. I don’t remember whether there were any other major presentations. At any rate, as soon as I stopped teaching on the first or second of May, I started collecting the high resolution data.

SS So this is '76?

SCH '76 still. Even before that work was published I started working on the high resolution structure, and I worked out exactly how to do it. Each exposure was a half-degree oscillation and took 12 hours, so that I could get a degree of data per day. I needed two derivatives, mercury and platinum - no mercury and uranyl. We had found the 3rd derivative, uranyl, which was better than platinum for high resolution, and we only used mercury and uranyl at high resolution. In retrospect, uranyl marks the calcium sites that we discovered when we looked at the high resolution structure. All I remember was for 3 different data sets, native, mercury and uranyl, I was going to need to collect a degree of data a day, and I could be done by Thanksgiving, and that’s what I did. I think there was hardly a day without data except for those days the X-ray machine needed to have its filament changed. Life was actually nicely disciplined: every 12 hours you changed a crystal, and if you had trouble lining up the crystal, (you lined up the crystal using the X-ray diffraction pattern), it took a little longer; if you slipped a bit, you only got 3 exposures in 2 days rather than 4 exposures in 2 days. Then we also wrote up the 5.5 angstrom structure. It got published, I think, in early 77, in Nature.

During '76-'77 I had teaching leave. Harvard gave junior faculty a teaching leave sometime during their junior faculty period. Gerard (Bricogne) and I had planned the meeting in Paris, which by the summer sometime was confirmed as something that we would get for the summer of '77. We had to go to a planning meeting in Paris for it. I don’t quite remember when that was.

SS But where was he (Gerard Bricogne)?

SCH He was in Erice and we talked there. My plan was to spend, as I remember, the spring semester in Cambridge (England) working with Gerard to put the data in order and to finish off programs and debug things and then transfer the operation to Paris to do the serious work, which is exactly what we did. So by early December I had actually finished all the data collection. Art Olson, who had joined as a post doc, was responsible for processing many of the data. And then I came back for the month of April as I remember and actually finished all the scaling and postrefining and data processing on the NY computer, stuff that now gets done in an evening or less for data sets that are even bigger, took a month easily. And basically a whole month, there was nothing else in my life at all but that’s what computing is about.

SS So you were mainly in England?

SCH I was mainly in England. I spent April doing all this data processing and I promised myself a weekend off if we got an R factor better than 13% and it was 12.8 so I went to Istanbul for a long weekend. And off the record, I think I told you a funny story about my return from Istanbul. To read about this story see the Science and Society section.

And so we went off to Paris.

|

|

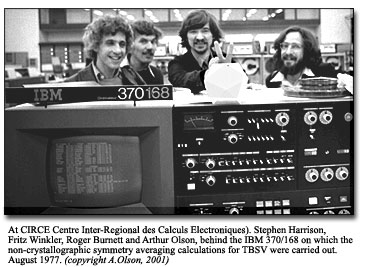

The Paris workshop was terrific. The workshop was not just dedicated to this one project, but it was a workshop where people learned and so on. Roger Burnett came from Basel. He was embarking on a study of the adenovirus hexon with Richard Franklin. So he wanted to learn this new non-crystallographic symmetry methodology, although it wasn’t relevant to that project directly.

SS I hadn't realized he was a student of Michael Rossmann.

SCH He was a student of Michael’s. And Gerard of course was there and Fritz Winkler came for the summer. He had returned for a year to the lab he had come from at ETH(Zurich) and then moved to EMBL which had come into existence about that time. He came for the whole 2 months of the summer, and the woman who would later become his wife showed up also, as did the woman who would become Roger’s first wife. Art Olson was there, and a few others as well as some other people not directly in that workshop who computed at CECAM. One was a woman named Shoshana Wodak who was already pretty well known for computations on protein structure. She had worked with Cy Levinthal as a postdoc or graduate student and had done some of the earliest computer graphics when Levinthal first moved to New York (Columbia). So it was quite pleasant to have 8 weeks that really were intellectually stimulating in various ways. Typical of the organization of European Research Institutes, the computer certainly ran all night but you had to be out by 8 o’clock unless you had special permission to stay around during the evening and which could only be granted once or twice in the summer. Basically we would get out to Orsay early in the morning, work all day, do trial runs, and submit jobs overnight if needed but we had to go home by 8 o'clock, so it did enforce a certain amount of exploration in Paris.

SS It was in Paris?

SCH It was actually in Orsay which is outside of Paris. I was very lucky. I was looking around for some place to sublet for the summer, and by the weirdest of coincidences the daughter of a friend of my mother’s, who lived in Paris with her husband and two kids, was coming to Baltimore with her family to be with her mother’s family. Their apartment, which was right near Cite Universitaire and absolutely perfectly convenient for going out to Orsay either by train or by car, was available and so I rented it for the summer. Roger Burnett and Fritz Winkler shared it with me. We had a great time. It had a two-manual Neupert harpsichord in it and I learned the Italian Concerto that summer in those hours when we weren’t allowed to stay at Orsay.

SS I thought you did restaurants in Paris.

SCH We didn't have that much money. We celebrated one occasion by going to a 3-star restaurant, but most of the 3 star restaurants were closed by August, and we even did a lot of our own cooking. There was a cafe on the Ile St. Louis called Le Flore en l'Ile that had Berthillon ice cream, that’s the best ice cream in Paris.

SS I remember that famous place.

SCH We called it (Le Flore en l'Ile) the "Sorbet Place", and we would go there almost every night - at least several times a week - around midnight and have ice cream. Gerard was staying near the Bastille in an apartment he was sharing with some people he had connections with, and his girlfriend was around for much of the summer. She is now his wife, and they live in Cambridge mostly.

One or two nights we could stay out at Orsay, and so when we did the very first phase refinement averaging cycle, which was probably about 4-5 weeks into the summer and that was sort of the big test - either that was going to work or we were in real trouble. We got permission to stay overnight. It was sort of like your old vision of a huge computer center. There was this enormous room that in retrospect was very impressive in that it had no columns. It somehow had a cantilevered ceiling that was 3 stories high and the walls were completely lined with racks of 7 to 9 track tapes - big 12 inch reels of computer tape because people from all over France would send their tapes in with data. So there were these ladders like in a library that slid along roller bars, and it was a sort of surrealistic huge room with a computer that took up a lot of space at the time, so there was an unbelievable surrealistic atmosphere. You were never allowed in that room except under these special conditions, but because we were there all night, they did let us in to watch the computation, and you could indeed watch the computation because things were reported. It took several hours - altogether one CPU hour which was 4 to 5 elapsed time-hours and of course we were nervous as hell.

In

fact it worked! And it was just great and in the early dawn we went back to

Paris and wandered around for a little while and realizing that it was all sort

of working and that was very nice. And so we got 3 or 4 cycles of this computation

done which was enough to give a really good map. We couldn’t contour it

there. Fortunately there was a nice automatic way of contouring the map using

early computer graphic type things - a flying spot contouring instrument that

had been developed at the MRC at Cambridge. So I flew over to Cambridge with

the tapes, and we had worked out that a very nice photographic technician would

stay up for much of the night before he went off for his annual holiday in Italy

and finish off and take what we did on the flying spot photometer and put it

out on photographic film, which could then be blown up onto something that was

like a visible format, maybe a foot by foot or 8 x 10 size sheet, which you

could then stack up. And so I came back to Paris with these sheets and we stacked

them up and started analyzing them - tracing the polypeptide chain.

In

fact it worked! And it was just great and in the early dawn we went back to

Paris and wandered around for a little while and realizing that it was all sort

of working and that was very nice. And so we got 3 or 4 cycles of this computation

done which was enough to give a really good map. We couldn’t contour it

there. Fortunately there was a nice automatic way of contouring the map using

early computer graphic type things - a flying spot contouring instrument that

had been developed at the MRC at Cambridge. So I flew over to Cambridge with

the tapes, and we had worked out that a very nice photographic technician would

stay up for much of the night before he went off for his annual holiday in Italy

and finish off and take what we did on the flying spot photometer and put it

out on photographic film, which could then be blown up onto something that was

like a visible format, maybe a foot by foot or 8 x 10 size sheet, which you

could then stack up. And so I came back to Paris with these sheets and we stacked

them up and started analyzing them - tracing the polypeptide chain.

SS So at this stage

you could actually begin to trace the polypeptide chain?

SS So at this stage

you could actually begin to trace the polypeptide chain?

SCH At this stage we actually traced the entire S domain in a day because the map was really so good.

SS But had you done that before even for practice?

SCH No

SS It must have been very exciting.

SCH Yes, it was terrific and so we traced the polypeptide chain. We didn't know the sequence yet. It was clear the map was so good we knew we would be able to read the sequence off more or less and make good guesses about every side chain. And either by then or shortly thereafter I had worked out a collaboration with Bob Sauer, who had moved from Harvard where he had been a graduate student with Mark Ptashne, to MIT as an Assistant Professor. He was then a protein chemist entirely, and I worked out a collaboration with him to determine the sequence.

SS But that was protein sequencing.

SCH That was old fashioned protein sequencing. And so he did it and we used the electron density map as a way of linking up the peptides, so he had to do a lot less sequencing. As he would sequence a peptide, we recognized where it was.

SS Could you tell which amino acid was which?

SCH The map was good enough that we could read the sequence off roughly; there were plenty of ambiguities, but we could make a good guess. Almost throughout as the peptides got sequenced usually we were able easily by inspection to recognize where they were.

SS So now you are back in the States?

SCH Now, I’m back in the States. This is a couple of years later. We published it without knowing the correct sequence.

SS So the Nature paper doesn’t have the sequence?

SCH The Nature paper doesn’t have the sequence. That got published in the Journal of Molecular Biology a couple of years later.

SS So let’s not jump to that now.

SCH And so things got done as planned and at the end of the summer we had a nice celebration at La Coupole when it reopened Sept. 1. And then Gerard and I both went to a European crystallography meeting in Oxford and then I went home. I got a chance to present the structure at the European crystallography meeting.

SS But you didn't have anything biologically interesting.

SCH We didn't have very much that was biologically interesting yet, because we hadn’t had a chance properly to analyze things. But for the crystallographers, just the sheer methodology and how to do it was enormously interesting. At this point there was one bit of biology that we did have. The first thing we could immediately see was the density that we thought might be a piece of RNA doing the assembly switching was in fact an arm of the protein and it was perfectly clear then that the notion that an arm would fold up in one situation and not in another was what was going on. That we understood completely. I noticed that already in the map sections as I looked at them when they were coming off the photography in Cambridge, before I flew back.

SS At which stage is this? Is this looking at the contour maps?

SCH This is looking at the contour maps. It was perfectly clear that what we thought was RNA was not and indeed there was likely to be no well-ordered RNA in the structure and therefore our notions of RNA packaging had to be completely revised. And I remember spending a sleepless night about it in Cambridge before I flew back to Paris trying to figure out what it all meant, because that was very clear. I don’t remember how I presented it, but the notion that one had to revise the way you thought about RNA packaging was evident. During that sleepless night I also thought: "Oh my God, I guess I’ve lost a friend", because in his review on nucleosomes, Roger Kornberg had just written about how proteins don’t have arms and now I had just seen for the first time that proteins can have dramatic arms. That certainly changed my concept of how proteins work, seeing that arm, because arms just weren’t things one had seen before. Proteins could have long arms that would be disordered except when they became part of a more complex structure.

I must have said something about how what this now proved was that folding of part of the protein was also part of assembly, in other words that assembly and folding weren’t distinguishable processes for part of the protein. We knew from physical chemistry on turnip crinkle virus that the protein would be a dimer if you took the virus apart. Bushy stunt virus is so stable that you have to take it apart under denaturing conditions, but turnip crinkle virus, which we knew from electron microscopy would be very similar, could be taken apart reversibly and it was known that the soluble form of the protein was a dimer. Now of course one saw the dimers with the P domains clustered. What also became obvious was that the arm had to be unfolded in the free dimer, because it was interacting in ways that depended totally on the assembly itself, and because it actually was disordered on the A and B subunits, and only ordered on the C subunits. There was, in other words, a disorder-order transition that was part of assembly.

There certainly was biology, but people were terribly skeptical. The thing that amazed me was that people were a lot less excited then they ought to have been. My talk was the last one at the session and it was almost lunch time and several people who gave very boring talks, I don’t remember what they were about, went on over their time. There was a mass exodus right before my talk because the cafeteria had just opened and I was really upset.

SS Now these were crystallographers. I think perhaps people who were more a bridge between biology or protein chemists would be more interested.

SCH The notion that you could solve a virus structure had been around since viruses were first crystallized, the late 1930s. Here was indeed the crystallographic announcement that you could do something that people had been talking about for a long time and half the audience didn't stay to hear it. It had me upset - it was the biggest disappointment of the summer.

The other thing that was clear from the questions that were asked was: "Well gee, can you not see RNA just because you’ve done icosahedral averaging?". People didn't understand that the symmetry of the outside of the particle would mean that it was already icosahedral averaged by packing in the crystal and therefore we hadn’t done anything computational; that it would not be possible that there would be a unique RNA structure. To this day, there is still a notion around that the RNA will have some unique conformation and if we can only orient the particle with respect to that unique conformation then we would actually see totally ordered RNA.

But once I saw the answer, I realized that it had to be the answer: that this thing had evolved as a package not to care. That it was actually evolutionarily terribly important that it not care at all about where there were stems and loops in the RNA, because the RNA needed to evolve, and even minor changes in its sequence would almost certainly change dramatically the stability of particular stems and loops.

SS The way you describe that was in evolutionary terms, were you actually thinking about evolution then?

SCH Absolutely

SS Even at that stage?

SCH Not very deeply, of course, but, remember the whole quasi-equivalence business was sort of an evolutionary argument, in other words a genetic economy argument. Basically, one of the constraints on the evolution of a viral genome is to use up as little of it as possible in specifying the coat protein. So in a funny way from Watson and Crick on, the way you thought about design efficiency was fundamental; efficient designs - evolutionarily efficient as well as mechanically efficient. So I remember thinking: "Oh yes, it’s obvious. It’s got to evolve, the design that’s been selected has to be one that basically is completely indifferent to what’s packaged except for some packaging sequence". So then the question became: is there a unique packaging sequence, which was the concept since the origin of assembly in TMV had been defined, or 3 or 4? The packaging sequence might have been a discontinuous set of 3 or 4 sites in a structure like this.

Is the packaging signal recognized by the underside of the S domain, as we came to call it, and we just don’t see that because we see that averaged out so it’s only at 1/60th occupancy (which would be completely invisible) or is it recognized by what we came to call the R domain, namely the positively charged N-terminal portion that projected in a disordered way inward? And if so, was the R domain a folded module that we couldn’t see because it was adopting all kinds of positions? That it was a defined conformation on a flexible tether was my first guess, and we drew it that way. The drawings with the cutaway showing the inner scaffold: there’s kind of a Christmas-tree ball hanging down, a tear-drop shaped thing, because I thought there might even be a domain. And now we know - from Alan Frankel’s work on TAT and TAR and then Lee Gehrke’s work on alfalfa mosaic virus - that it almost certainly it is not that way, that there is some structure for the RNA packaging sequence that is significant, and a peptide, maybe just an arginine rich peptide or something like that, but anyhow an appropriate peptide from the R domain, fits against the RNA structure in some defined way. The three dimensional structure of the recognition interaction is, therefore, likely to be largely in the RNA. But that was a notion, - a revision of our ideas of which is the structured part and which the disordered part of the RNA/protein interaction - that came much later.

I’m sure by the end of that summer I certainly understood that the problem was completely the other way around from the way I thought of it and one had to know where the packaging sequence was recognized. Was it the underside of the S domain or was it the R domain? Was the R domain a folded structure? My prejudice was strongly that it was the R domain and that it was a folded structure and that sort of dictated how I sketched it though I’m pretty sure I said we didn't know. So, I must have said some things like that - not about the R domain because we still didn't know about the R domain. We had traced the chain in such a way that we basically knew there had to be a lot of N-terminal stuff that we were missing. We didn't know it was basic, we didn't know that until the sequence was known, but we certainly knew that there was a fair amount at the N-terminus that we must be missing from the size of the subunit and the fact that the C-terminus of what we traced was visible. It was on the outside.

SS Could you tell it was the C-terminus?

SCH Yes, you could tell the polarity of the chain and that if there was a lot dangling off that it would be proteolytically sensitive. That much we knew. I guess we might even have known a trace of C-terminal sequence from ancient C-terminal sequencing days, probably we were pretty sure the C-terminus we saw was the C-terminus.

So now all of a sudden there was biology if you wish, or what I thought was really interesting biology, but I also realized that I had this terrible task of convincing people that what seemed to them was sort of a boring and uninteresting answer was in fact a very interesting answer. They just wanted to see RNA and see something definite and it just turned out that the solution was much more interesting and sophisticated but would take a lot more effort to work out and that proved really hard. In other words everybody said: "Oh what a terrible disappointment. You can’t see RNA".

The fact that one saw the arm issue, which completely revised how I thought about proteins, was very hard to communicate, and to this day I don’t think people think about it explicitly that way.

At a seminar about transcription factors a couple of months ago, I drew a picture of the complex of many transcription factors - a schematic picture showing factors involved in some sort of complex gene control - and said I thought the first kind of structural question was basically: what are the significant substructures in such a complex? We know that there are stereotypical domains that interact with DNA and that you can draw those, for the hell of it, as little balls that interact with each other when bound to DNA. Indeed, they may interact with each other in a very defined three-dimensional way as I was going to conclude was likely from the NFAT-Fos-Jun complex. But the key question is then: what is going on with the other parts of these same transcription factors? If the DNA binding domain is a compact "ball", is there a flexible stretch of polypeptide chain tethered to another ball that participates in another subassembly? Is there a flexible stretch tethered to another ball that interacts with polymerase or parts of polymerase as a target? In other words, the first key issue is: what are the tethers and how do they link the known DNA-binding domains to other things? That is how I view it, because the arms of the viral subunits do similar things. Don Wiley has also always talked about it that way, again because looking at viruses introduced the concept into his thinking. But from the reaction at the MIT seminar I realized it’s not taught that way or presented that way at all.

The notion that there are likely to be extended, probably flexible, linkers between modules that do things (e.g., bind DNA) is left out. This is, however, a terribly important concept in big macromolecular assemblies, because it allows one part to have a defined specific structure and set of contacts, but to be linked forgivingly to another part that has to do a different specific job at variable distances from the first part. In the case of TBSV, you have to have something that reaches in and adapts to any kinds of RNA stems and loops that are inside, because those may well change in the course of the evolution of the virus. Indeed, a given piece of RNA may have multiple equi-energetic patterns of stems and loops if the exact pattern of stems and loops doesn’t matter certainly, how the stems and loops get packed together inside the virus may not be the same from one particle to another. If you are just going to stuff asparagus spears into a can you won’t do it exactly the same way each time.

SS You assume also that you can package any RNA.